Introduction

The SSISDB database (a.k.a. the Integration Services catalog) was introduced back in SQL Server 2012 to de-clutter the MSDB database and provide an in-house logging and reporting infrastructure. In a nutshell, SSISDB is an SSIS framework making SQL Server Integration Services more robust and enterprise-friendly by providing:

- Database backup

- Database encryption

- Support for environments

- Support project and package parameters

- Package versioning

- Customer SSRS performance reports built into SSMS

- Deployment directly from SSDT

While SSISDB framework made SSIS much more capable, it came with some Microsoft assumptions (a.k.a. defaults). Those defaults are there to help you to stand on your feet, but might not be optimal when you start running, and are far from perfect when you are sprinting.

This article addresses the defaults, why those defaults might not be optimal, and how to change those defaults.

Problem

SSISDB Catalog ships with a built-in process to clean up operations and project versioning. This cleanup process relies on SSISDB defaults that might make your SSIS inoperable if not changed.

Virtually every new SSIS project starts with building and running just a few packages while there is an abundance of free disk space. Fast forward 3-6 months and you have a number of SSIS projects, tens or hundreds of packages, and you might also have a need for a fresh data for reporting and analytics (read: constantly running packages around the clock). Those SSIS packages might accumulate versioning data (less of a problem) and logging data (more of a problem) way beyond Microsoft cleanup defaults which might lead to SSISDB database size to balloon and grow in size unexpectedly (read: eat up all the available disk space).

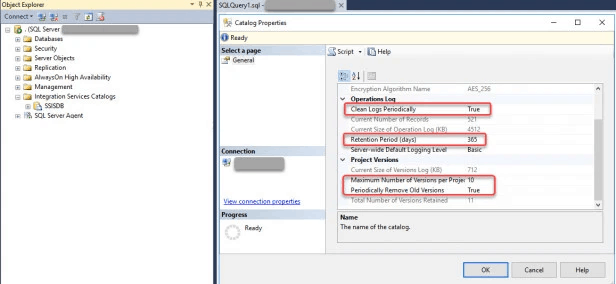

The single default that is responsible to capture SSIS run-time related data is Server-wide Default Logging Level = Basic. This setting is ultimately what manages the SSISDB database size and ultimately taking up the disk space. That being said, SSIS Catalog (Fig 1) provides other options to control the SSISDB database size/disk space:

- Server-wide Default Logging Level

- Clean-up defaults:

- Clean Logs Periodically = True

- Periodically Remove Old Versions = True

- Retention Period (days) = 365

- Maximum Number of Versions per Projects = 10

While changes to Server-wide Default Logging Level from Basic to None to regain disk space is highly unpractical and will make troubleshooting a nightmare, changes to other SSISDB Catalog settings like Maximum Number of Versions per Projects and Retention Period (days) can have minimal effect on troubleshooting, while keeping SSISDB database/disk space in control.

All those defaults are driving the cleanup process (a.k.a. SSIS Server Maintenance Job). While both Clean Logs Periodically = True and Periodically Remove Old Versions = True are virtually always perfect, settings for both Maximum Number of Versions per Projects = 10 and Retention Period (days) = 365 might be overoptimistic. And here is why.

The main reason why both Clean Logs Periodically = True and Periodically Remove Old Versions = True defaults are perfect is, without those defaults (or effectively if both changed to False), SSISDB will grow indefinitely as it will only accumulate log and versioning data without removing any data and run out of disk space.

The main reason why both Maximum Number of Versions per Projects = 10 and Retaining logging data for 365 days might be overoptimistic is Microsoft’s decision to retain the data based on a time period and not size. That means the fixed period number is not taking into account how much data will be accumulated. While Maximum Number of Versions per Projects might grow into a problem once you have thousands of packages, Retention Period (days) is going to become a huge problem once you have packages that are running every few minutes. I don’t think Microsoft ever expected accumulation of log data for 1440 runs a day (running every minute) which will require a cleanup more often than every 365 days or it will run out disk space.

Solution

The solution would involve changing the defaults to smaller numbers, so SSISDB keeps less information. Based on my personal experience of running packages every minute (e-commerce platform requirement) and having up to 10 projects, decreasing Retention Period (days) from 365 (default) to 7 days and Maximum Number of Versions per Projects from 10 (default) to 5 would suffice to control SSISDB/disk space while keeping enough information for debugging and troubleshooting purposes.

You have two options to make this change:

- Change defaults using SQL Server Management Studio (a.k.a. “SSMS”) by expanding Integration Services, right-clicking SSISDB and then clicking on the Properties menu-item (Fig 2 – SSMS).

- Change defaults using T-SQL below to update records in ssisdb.catalog.catalog_properties table directly.

UPDATE ssisdb.CATALOG.catalog_properties

SET property_value = 5

WHERE property_name = 'MAX_PROJECT_VERSIONS'

UPDATE ssisdb.CATALOG.catalog_properties

SET property_value = 7

WHERE property_name = 'RETENTION_WINDOW'

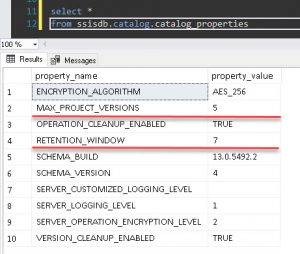

Once the change is completed, you should be able to confirm that changes by running the T-SQL query below (Fig 3 – SSISDB Catalog)

SELECT *

FROM ssisdb.CATALOG.catalog_properties

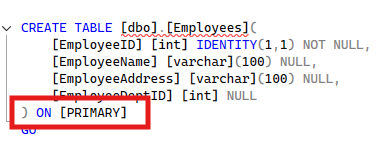

While changing defaults might fix the problem going forward, it might not fix your existing SSISDB disk space problem quick enough. You can speed up the cleanup process by modifying the code and changing @delete_batch_size from 1000 to like 10000 by altering ssisdb.internal.cleanup_server_retention_window stored procedure (Fig 4 – Retention Procedure).

Summary

This article highlighted a potential problem with SSISDB Catalog defaults that might lead to running out of disk space and provided a solution that would force SSISDB to retain less information.

This Post Has 2 Comments

Everytime package runs it has to be pulled from the catalog. What is the impact of using faster or slower disk for the underlying data file(s)? Curious on this impact. For example what if this db was put on ultra fast ssd verses standard ssd?

I would expect in most cases all of that metadata would be in memory. But for a large catalog it could absolutely make a difference.