One of the requirements of ETL is ensuring that bad data does not cause the whole ETL process to stop with an error. Failure to do this results in a very brittle process in which one row of bad data can cause a whole day’s worth of data loading to fail. My favorite war story about this is a customer who had an ETL developer who spent 40% of her time every week keeping the lights on for one ETL process. She had written it many years before and it had become almost half of her job just to keep it running.

The fact of the matter is that computer systems have always had the GIGO (garbage in garbage out) problem. Assuming that data will come in clean is always a recipe for failure. Instead, one must assume the data will come in dirty in a variety of ways (some yet unknown) and handle this bad data in the SSIS package.

The Problem

SSIS does a great job of error logging and providing built-in methods to redirect data to different flows based on many different configurable items. Pretty much anything you can think of to look at and decide where to send it, you can do. What it doesn’t do a very good job of out of the box is providing a generic place to put the bad data in an easily human- readable format for troubleshooting after the fact.

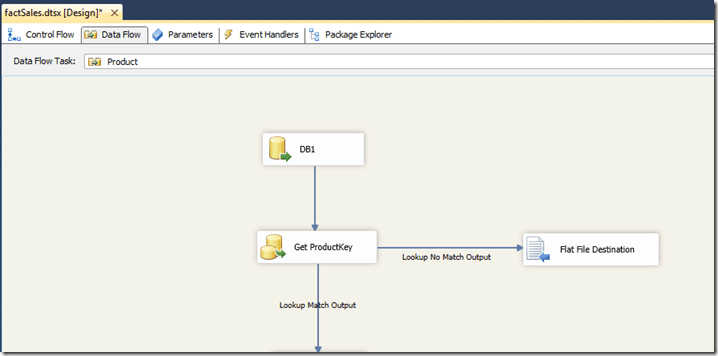

Observe the following common data flow error handling pattern for loading a fact table. A lookup component retrieves the foreign key from a dimension table and sends the row on through the data flow if there is a match. If it doesn’t match, the row is sent elsewhere, in this case to a text file connection. A DBA or analyst then has the responsibility to monitor the text files, find where the error was, fix the issue and reprocess the data. This is what the developer above was spending most of that time on.

There are several problems with this approach:

- I have to have a separate text file for every place in my ETL process where I might encounter bad data.

- All that goes in the text file is a comma-delimited list of data. Not very user friendly when it comes to troubleshooting time.

- I’ll have to come up with a way to dynamically name the text files with a timestamp, etc., in order to not overwrite the file every day. Otherwise yesterday’s bad data may be overwritten in today’s ETL run.

The Solution

In the engagement described above, I was asked to fix the ETL process in question to make it more robust and not so brittle and prone to failure. The customer also wanted an easier way to identify and analyze the bad data so that end user analysts could take over that task and it would not require developer or BA resources. This is the solution I came up with.

Table

First, we need a table in which to store the bad data. In order to prevent having to have a unique table for each place this is used, I decided to store the data as XML in a single table. That way, this becomes a generic solution that can be used regardless of the number of columns, data types, etc.

CREATE TABLE [dbo].[ErrorRows_XML](

[ErrorID] [INT] IDENTITY(1,1) NOT NULL,

[ErrorDate] [DATETIME] NOT NULL,

[ErrorSource] [VARCHAR](500) NULL,

[ErrorCount] [INT] NULL,

[RowData]

[xml][/xml]

NULL, [BatchID] [INT] NULL, CONSTRAINT [PK_EFC_ErrorRows_XML] PRIMARY KEY CLUSTERED ([ErrorID] ASC) ) GO

The ErrorSource column is used to identify where in the ETL process this particular error occurred. I used the following naming convention for ErrorSource: PackageName_DataFlowName_ComponentName. So in the example above the ErrorSource would be factSales_Product_GetProductKey.

The ErrorCount column is used to store how many rows are in this particular dataset. Remember that we are storing all the bad data from one given instance of the error in one row via XML.

RowData contains the actual bad data in XML format.

Finally, in this case I have a BatchID column because this ETL process uses batches and it makes it easy to identify which run the bad data came from. This may not be necessary in all uses of this pattern.

The Package

Continuing with our example, let’s modify the package to write the bad data to the table. We’re going to do that by using a Recordset destination. This destination stores the data in a variable for future use.

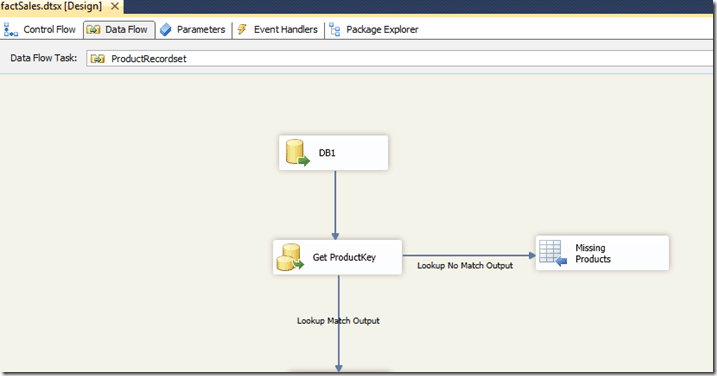

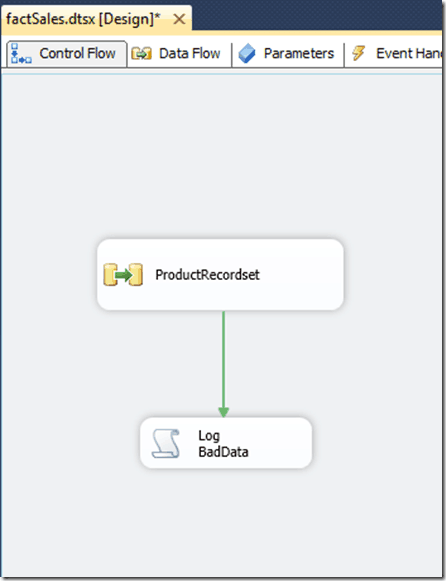

Here is the new data flow for the product lookup. When this data flow runs, the bad data will be stored as an ADO recordset in a variable.

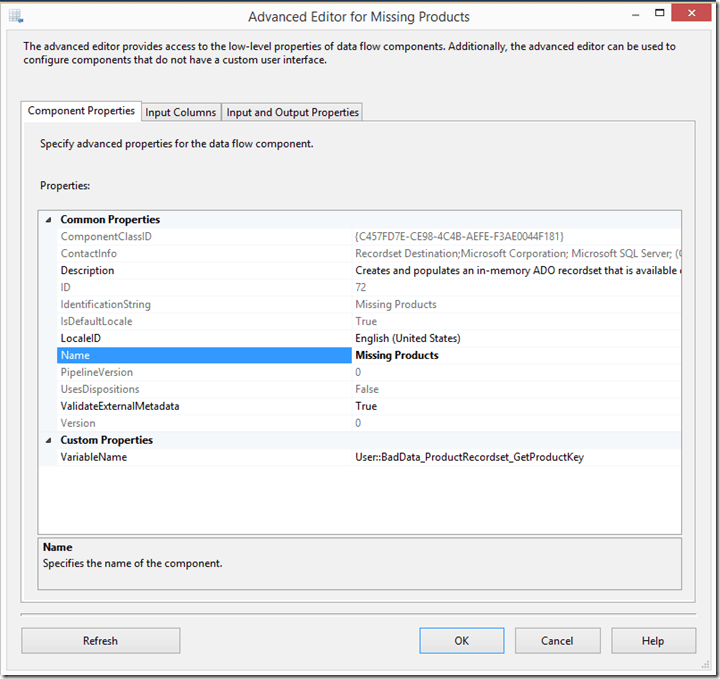

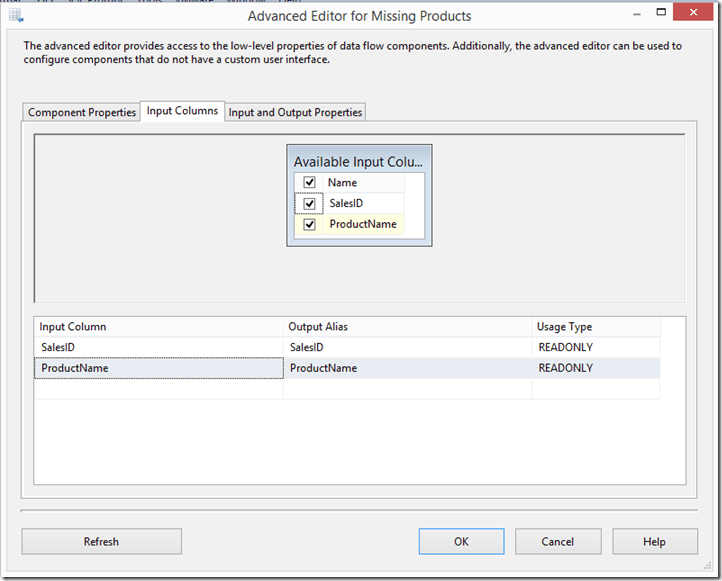

The recordset destination does not have it’s own GUI so we always use the advanced editor to configure it. But it’s configuration is very simple. The only configuration we have to do is choose the variable to send the output to and choose what columns we want to include in the recordset. This should be a package level variable with a data type of Object.

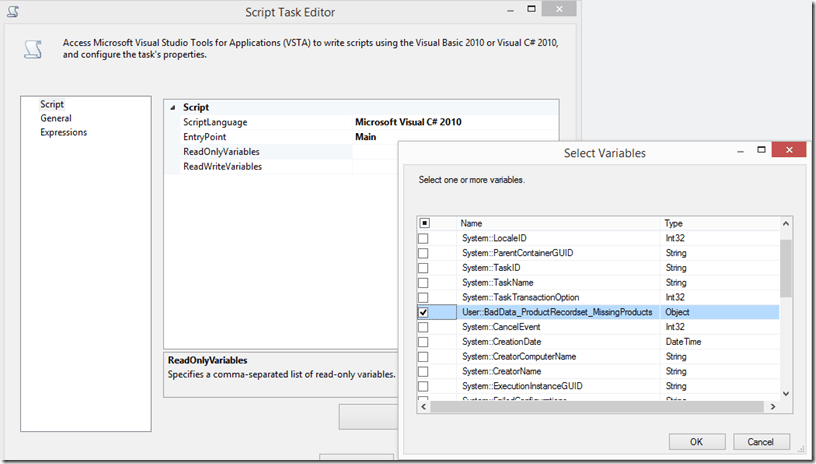

At this point, we have an SSIS variable that contains the bad data. The last step is to add a Script Task to the package to serialize the data to XML and write it to the table.

In the script task properties, we include the object variable as a ReadOnly variable.

The script consists of a WriteError() function that does the heavy lifting. It uses the GetXML method of the ADO dataset object to serialize the data as XML. Then it’s a simple ADO call to write the XML data to the database.

using System;

using System.Data;

using Microsoft.SqlServer.Dts.Runtime;

using System.Windows.Forms;

using System.Xml;

using System.Data.SqlClient;

using System.Data.OleDb;

using System.IO;

namespace ST_d98c4c2b62f94789a154c95495b6588e

{

[Microsoft.SqlServer.Dts.Tasks.ScriptTask.SSISScriptTaskEntryPointAttribute]

public partial class ScriptMain : Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase

{

public void Main()

{

WriteData("BadData_ProductRecordset_MissingProducts");

Dts.TaskResult = (int)ScriptResults.Success;

}

public void WriteData(string variable)

{

//serialize to xml

OleDbDataAdapter da = new OleDbDataAdapter();

DataTable dt = new DataTable();

DataSet ds = new DataSet();

da.Fill(dt, Dts.Variables[variable].Value);

ds.Tables.Add(dt);

string xml = ds.GetXml();

if (xml != "<NewDataset />")

{

//write to db

string ErrSource = Dts.Variables[variable].Name;

string BatchID = Dts.Variables["BatchID"].Value.ToString();

SqlConnection cn = new SqlConnection();

cn = (SqlConnection)(Dts.Connections["DB1"].AcquireConnection(Dts.Transaction) as SqlConnection);

SqlCommand cmd = new SqlCommand("insert dbo.ErrorRows_XML(ErrorSource,RowData,BatchID) values (@ErrorSource, @XML, @BatchID)");

cmd.Connection = cn;

cmd.Parameters.Add("@ErrorSource", SqlDbType.VarChar).Value = ErrSource;

cmd.Parameters.Add("@XML", SqlDbType.Xml).Value = xml;

cmd.Parameters.Add("@BatchID", SqlDbType.Int).Value = BatchID;

cmd.ExecuteNonQuery();

}

}

}

}

Finally, I create a view for each ErrorSource using XQuery to parse the XML as easy to read data. Ultimately there could be a front end for this in .NET or even SSRS but I haven’t built that. In any case this should be much more dynamic than having to create a view for each error but I just haven’t invested the time. Even so, having an analyst select from a view instead of poring through text files is much more user friendly.

CREATE VIEW [dbo].[vBadData_ProductRecordset_MissingProducts]

AS

SELECT

x.ErrorID

,x.ErrorDate

,x.BatchID

,tbl.col.VALUE(N'(SalesID)[1]',N'bigint') AS SalesID

,tbl.col.VALUE(N'(ProductName)[1]',N'varchar(50)') AS ProductName

FROM dbo.ErrorRows_XML x

cross apply RowData.nodes(N'//Table1') AS tbl(col)

WHERE ErrorSource = 'BadData_ProductRecordset_MissingProducts'

This Post Has 4 Comments

Hello,

nice script, but it has typos and does not compile. You might want to review it before posting it.

1. @BatchID”); should be @BatchID)”);

2. Missing: cmd.Connection = cn;

Thanks for the note. I missed this while reviewing the post. I have fixed the script and reposted.

Thanks to both of you.

Interesting technique. Thanks!