In today’s tech-saturated world, we expect developers to have a broad range of skills and programming languages at their disposal, SQL usually being one of those. This ensures the whole team can help and support one another in their daily lives, but it also leads to mistakes with SQL code.

One potential issue here is the lack of time to delve really deeply into each programming language at one’s disposal. T-SQL, the language of SQL Server, behaves very differently from, say, C#. Languages like C# are row based, which means it’s common to iterate through a data set and process each row individually. This works quite well in those languages, but quite terribly in SQL Server. Being set based, SQL Server prefers to deal with the whole data set at once.

Because of these nuances, it’s easy for developers to make mistakes when creating SQL Server code. Let’s go through some common errors that we see.

T-SQL

Let’s start with basic T-SQL patterns that we commonly see, which are either not great for performance or come with other considerations that are often overlooked.

Select *

You’re probably not surprised that we see this a lot. This is often used because the person writing the code isn’t sure exactly what they’ll need to return from the object they’re querying. Using Select * is not a great idea for several reasons. Here are things to consider:

- Do you really need all of the data returned? Supporting indexes for queries like this are likely to be wider than necessary in order to cover all of the columns in the table. Wouldn’t it be nice if your indexes were smaller? They’d take less disk space but more importantly would require less I/O to retrieve and occupy less space in memory.

- Do you need to return that nvarchar(max) field that you’ve got in your table? Not sure if you’ve got one?

- What if the underlying structure changes and somebody adds in a (max) field? Do they have to check with you first or will your query suddenly tank and cause you and your users a headache? That wouldn’t happen if we were selecting only what is strictly necessary for our query.
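As a rough sketch of the points above, the following shows an explicit column list, a narrow supporting index, and a catalog query for spotting (max) columns. The table dbo.Customers, its columns and the index name are hypothetical and exist only for illustration; the sketch also assumes CustomerId is the clustered primary key.

```sql
-- Hypothetical table and column names, for illustration only.
-- Instead of: SELECT * FROM dbo.Customers WHERE Country = 'NZ';
SELECT CustomerId,
       CustomerName,
       Email
FROM dbo.Customers
WHERE Country = 'NZ';

-- A narrow index can cover this query without carrying every column.
-- (CustomerId is assumed to be the clustered key, so it is carried automatically.)
CREATE NONCLUSTERED INDEX IX_Customers_Country
    ON dbo.Customers (Country)
    INCLUDE (CustomerName, Email);

-- Not sure whether the table has a (max) column? max_length = -1 marks
-- varchar(max), nvarchar(max), varbinary(max) and xml columns.
SELECT c.name AS ColumnName,
       t.name AS TypeName
FROM sys.columns AS c
INNER JOIN sys.types AS t
    ON t.user_type_id = c.user_type_id
WHERE c.object_id = OBJECT_ID(N'dbo.Customers')
  AND c.max_length = -1;
```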

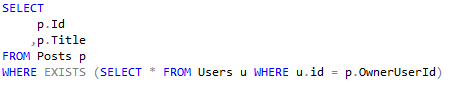

One of the valid uses of SELECT * is when using something like WHERE EXISTS, where you don’t actually need to return any of the data in the query, something like this:

You could even replace that with a SELECT 1 to be more explicit about what you’re doing. This is very much personal preference though.

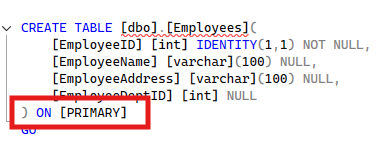
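For readers who cannot see the screenshots, here is a minimal sketch of the EXISTS pattern described above. The tables dbo.Customers and dbo.Orders are hypothetical stand-ins, not the objects from the original example.

```sql
-- Hypothetical tables: return customers that have at least one order.
-- The subquery's select list is never returned, so SELECT * and SELECT 1
-- behave the same way here; SELECT 1 just makes the intent explicit.
SELECT c.CustomerId,
       c.CustomerName
FROM dbo.Customers AS c
WHERE EXISTS (
    SELECT 1
    FROM dbo.Orders AS o
    WHERE o.CustomerId = c.CustomerId
);
```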

Select Into

Using SELECT...INTO seems like a really easy way of creating tables (whether temporary or permanent), as it takes out a lot of the leg work in defining the structure. An obvious issue here is that your object is created with no constraints (should it have a primary key?) and no indexes (do you really want this object to be a heap?). It also makes troubleshooting more difficult: if you hit something like a truncation issue, you can’t easily see the definition of your object without creating it and going to look for it (do you really want to have to run this code before you can complete the code review?).

Overall, it’s much more controlled if you spend the small amount of time required to declare your object explicitly. You can then make sure it’s exactly what you need and it’s much easier to maintain for those that follow.
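A minimal sketch of the explicit approach, assuming a hypothetical dbo.Orders table with OrderId, CustomerId, OrderTotal and OrderDate columns:

```sql
-- Instead of:
--   SELECT OrderId, CustomerId, OrderTotal
--   INTO #RecentOrders
--   FROM dbo.Orders WHERE ...;
-- declare the structure up front so reviewers can see types, keys and nullability.
CREATE TABLE #RecentOrders
(
    OrderId    int            NOT NULL PRIMARY KEY CLUSTERED,
    CustomerId int            NOT NULL,
    OrderTotal decimal(18, 2) NOT NULL
);

INSERT INTO #RecentOrders (OrderId, CustomerId, OrderTotal)
SELECT OrderId,
       CustomerId,
       OrderTotal
FROM dbo.Orders
WHERE OrderDate >= DATEADD(DAY, -30, SYSDATETIME());
```

The definition now lives in the code itself, so a truncation or nullability problem can be spotted during review without running anything.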

Implicit Conversions

We often come across implicit conversions when analysing a server’s most poorly performing queries. This happens when you are comparing two pieces of data that are not the same data type. This could be comparing a column to a variable, or it could equally be joining two tables together. If these fields are not the exact same data type, then SQL Server is going to have to perform an implicit conversion and convert one to match the other.

This can cause a couple of separate issues:

- If the server has to convert a column in a table to a different data type, then it may not be able to make proper use of the statistics on that column. These statistics are used when creating the execution plan and will inform how the query is actually run. Without these stats SQL Server can easily make a bad estimate and cause this query to run very poorly.

- The other reason that this will be slow is that SQL Server will often have to scan one of the tables in its entirety and convert this field before it can even consider joining the tables together, because the conversion on the column gets in the way of an index seek. The larger the underlying tables get, the worse performance becomes.

The best way to avoid this issue is to ensure that your data types match, whether that means making sure your variables match the field they are compared to or making sure both sides of a join use the same type. Also, remember, varchar(10) is not the same as nvarchar(10); they need to be the exact same data type.
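To make the varchar versus nvarchar point concrete, here is a small sketch. It assumes a hypothetical dbo.Customers table whose AccountCode column is varchar(10) and indexed; the exact plan you get will depend on collation and version.

```sql
-- Assumed for illustration: dbo.Customers.AccountCode is varchar(10) and indexed.

-- Mismatched: nvarchar has higher data type precedence than varchar, so the
-- COLUMN side is implicitly converted (look for CONVERT_IMPLICIT in the plan),
-- which can prevent an efficient index seek.
DECLARE @AccountCodeN nvarchar(10) = N'AB1234';

SELECT CustomerId
FROM dbo.Customers
WHERE AccountCode = @AccountCodeN;

-- Matched: the variable has the same type as the column, so no conversion
-- is needed on the column.
DECLARE @AccountCode varchar(10) = 'AB1234';

SELECT CustomerId
FROM dbo.Customers
WHERE AccountCode = @AccountCode;
```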

Obfuscation

One of the most common issues we see is code obfuscation. What we mean by this is abstracting some code out of what we’re doing and storing it elsewhere. Doing this makes sense to a certain extent. Why write the same code more than once? If we’re going to use it more than once, why not create an object that we can use everywhere to do this? While this sounds like an excellent idea, let’s discuss why it might not be as great as it first appears.

Nested Objects

Views

So many times, we see people write a view to perform some logic and then use it everywhere. Simple, right? Well, this grows over time. It may be that this is fine for now, but for a certain subset of queries you want to tweak that a little, so a view is created over the top of this view. This can very easily snowball and then you have views relying on views … relying on views … relying on views (you get the idea).

Primary issues:

- Changing logic can be fraught with danger. What if we have to tweak one of these nested views? Do we have the ability to test every single place where this view is used? Do we even know everywhere it’s used?

- Troubleshooting can be an absolute nightmare. You’ve got some incorrect data in the final query, but which of the sub views is it coming from? It makes a spider’s web of dependencies that are very time consuming and difficult to track. How much time can you invest in resolving this?

- When creating execution plans, SQL Server will only spend so much time working through these nested views before the optimiser gives up and settles for whatever plan it has found so far, often built on poor estimates. Any ideas how well that’s going to go and how our query is going to perform? Not well.

While views make a lot of sense when used properly, being aware of the nested views issue is something that could save you some major headaches in the future.
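One way to start answering “do we even know everywhere it’s used?” is to ask the catalog. This is only a sketch: dbo.vw_ActiveCustomers is a hypothetical view name, and dependency metadata will not capture references made through dynamic SQL or from application code.

```sql
-- Which objects in this database reference the view?
SELECT referencing_schema_name,
       referencing_entity_name
FROM sys.dm_sql_referencing_entities(N'dbo.vw_ActiveCustomers', N'OBJECT');

-- Which objects does the view itself depend on? Repeating this for each
-- referenced view reveals how deep the nesting goes.
SELECT referenced_schema_name,
       referenced_entity_name
FROM sys.sql_expression_dependencies
WHERE referencing_id = OBJECT_ID(N'dbo.vw_ActiveCustomers');
```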

Scalar Functions

We see so many functions being used in the wild. Some work well, some not so much. By far the largest problem we come across is the (mis)use of scalar functions. Using a function is extremely common in other languages and they work very well. But there are a couple of very large “gotchas” in SQL Server:

- As soon as you use a scalar function in SQL Server, it forces the query to run serially. This means you’re removing any ability for the query to run in parallel, and it therefore will likely dramatically impact the performance of your query. Scalar UDF Inlining, introduced in SQL Server 2019, improves on this, but it is a relatively new feature, only applies to functions that meet certain requirements, and is not available for everybody.

- Functions are also effectively treated as separate units of work once called. Their internals don’t show up in the calling query’s actual execution plan, and this makes them extremely difficult to locate, investigate and troubleshoot. It’s super easy to miss them altogether.

- Scalar functions are also executed once per row of the data set. Remember how SQL Server is a set-based language and likes dealing with a large data set all at once? Well, scalar functions will pull that particular rug out from under you and turn it into a row-based calculation which will have those very same performance issues.

- You also have the recurring issue of knowing exactly what the function is doing. What parameters are being passed? What’s the actual code that the function is executing? These questions can often be tricky to answer.

In our experience, scalar functions are fine when used correctly (sparingly and not in set-based operations; think setting a local variable using a function), but it’s extremely easy to use them incorrectly and shoot yourself in the foot.
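The sketch below contrasts a scalar function with one common set-based alternative, an inline table-valued function used through CROSS APPLY. The alternative is our illustration rather than something the points above prescribe, and every object name (dbo.Orders, dbo.fn_OrderTotalWithTax, dbo.tvf_OrderTotalWithTax) and the 15% rate are hypothetical. GO is the batch separator used by tools such as SSMS and sqlcmd.

```sql
-- Hypothetical scalar function: invoked once per row when used in a query.
CREATE FUNCTION dbo.fn_OrderTotalWithTax (@OrderTotal decimal(18, 2))
RETURNS decimal(18, 2)
AS
BEGIN
    RETURN @OrderTotal * 1.15;
END;
GO

-- Row-by-row usage inside a set-based query (the pattern to be wary of).
SELECT OrderId,
       dbo.fn_OrderTotalWithTax(OrderTotal) AS TotalWithTax
FROM dbo.Orders;
GO

-- Inline table-valued function: its body is expanded into the calling query,
-- so the optimiser can treat it as part of the set-based plan.
CREATE FUNCTION dbo.tvf_OrderTotalWithTax (@OrderTotal decimal(18, 2))
RETURNS TABLE
AS
RETURN
(
    SELECT CAST(@OrderTotal * 1.15 AS decimal(18, 2)) AS TotalWithTax
);
GO

SELECT o.OrderId,
       t.TotalWithTax
FROM dbo.Orders AS o
CROSS APPLY dbo.tvf_OrderTotalWithTax(o.OrderTotal) AS t;

-- Sparing use that is generally fine: one call to set a local variable.
DECLARE @Total decimal(18, 2) = dbo.fn_OrderTotalWithTax(100.00);
```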

IN (Subqueries)

Something that is very easy to do is to filter a query on the results of a subquery inside an IN clause.

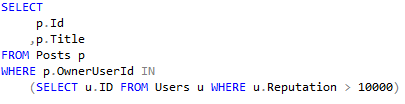

Using the Stack Overflow database, let’s say I wanted to see all posts for all users with more than 10,000 reputation points. I could write something like this:

Seems pretty simple, right? Well, the issue here is what goes on behind the scenes. That IN statement gets parsed out to something like a long chain of equality checks, one comparison per matching user ID, all joined together with OR.

In the example query here, there would be 25,334 clauses in this statement. That’s a lot of data to parse through and that just won’t scale well within SQL Server. Joins would be the correct way of doing this. Whether a direct join is possible, or whether it is better to run the subquery separately and put the result into a temp table, will depend on the logic of your particular query.
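Since the screenshots are not reproduced here, the following is a sketch of the three shapes discussed. It assumes the public Stack Overflow schema, with dbo.Users (Id, Reputation) and dbo.Posts (Id, OwnerUserId, Title); the temp table name is made up for illustration.

```sql
-- The IN (subquery) form described above.
SELECT p.Id,
       p.Title
FROM dbo.Posts AS p
WHERE p.OwnerUserId IN (SELECT u.Id
                        FROM dbo.Users AS u
                        WHERE u.Reputation > 10000);

-- Direct join. Users.Id is unique, so the join does not duplicate posts.
SELECT p.Id,
       p.Title
FROM dbo.Posts AS p
INNER JOIN dbo.Users AS u
    ON u.Id = p.OwnerUserId
WHERE u.Reputation > 10000;

-- Or separate the subquery into a temp table first, then join to it.
CREATE TABLE #HighRepUsers
(
    Id int NOT NULL PRIMARY KEY CLUSTERED
);

INSERT INTO #HighRepUsers (Id)
SELECT Id
FROM dbo.Users
WHERE Reputation > 10000;

SELECT p.Id,
       p.Title
FROM dbo.Posts AS p
INNER JOIN #HighRepUsers AS h
    ON h.Id = p.OwnerUserId;
```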

Triggers

Aah, triggers, the silent killers. Sometimes necessary, always costly. The number of times that we’ve seen poorly performing servers with triggers firing all over the place is definitely non-zero. They have a similar issue to scalar functions: their work runs out of sight of the statement that fired them, so it is easy to overlook in execution plans and the like.

Sometimes triggers are necessary, but it’s so easy to introduce them unnecessarily and then suffer the performance impact that follows. Need to do something upon or after insert? There are other ways of resolving those requirements without resorting to triggers.
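The text does not name specific alternatives, so treat the following as one possibility only: for simple audit-style requirements, the OUTPUT clause can capture inserted rows as part of the statement itself. The tables dbo.Orders and dbo.OrderAudit are hypothetical, and the sketch assumes dbo.OrderAudit has a default on its timestamp column and no triggers or foreign keys of its own (OUTPUT INTO places restrictions on the target table).

```sql
-- Hypothetical tables. The audit row is written by the INSERT itself,
-- so the behaviour is visible right where the data is modified.
INSERT INTO dbo.Orders (CustomerId, OrderTotal)
OUTPUT inserted.OrderId,
       inserted.CustomerId
    INTO dbo.OrderAudit (OrderId, CustomerId)
VALUES (42, 99.95);
```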

Synonyms

We’re glad that a lot of people don’t even know that synonyms are a thing in SQL Server, but when we do find them they can cause major confusion. While we totally understand that the business will often push for the simplest (cheapest) solution to resolve the issue of object renaming, it’ll definitely cause confusion when troubleshooting and ultimately become a maintenance issue.
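If you are not sure whether a database contains synonyms, a quick catalog query will list them along with the objects they point to. This is only a sketch; the column aliases are our own.

```sql
-- List every synonym in the current database and its underlying object.
SELECT SCHEMA_NAME(schema_id) AS SynonymSchema,
       name                   AS SynonymName,
       base_object_name
FROM sys.synonyms
ORDER BY SynonymSchema, SynonymName;
```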

Linked Servers used Incorrectly

Linked servers can be highly effective when used correctly. The issues that occur are with understanding how linked servers execute queries locally and remotely. If we just reference a linked server using the four-part naming convention ([RemoteServerName].[Database].[Schema].[Object]), then our local server can end up retrieving all data from the remote server and processing it locally. This may be fine if we’re doing a simple query, where we’re selecting all data in a single table. But once we get to adding in filtering and/or manipulating the data in any way (joining for example), then this can get inefficient very quickly.

There has been some change in recent versions of SQL Server in how it deals with these types of connections. Ultimately, the better way of processing this data is either to run the query on the remote server and pull back only the results, or to use a pass-through function such as OPENQUERY or OPENROWSET. Both approaches let the remote server do the filtering and joining before any rows cross the network, which avoids the issues above and is generally considered best practice for querying linked servers.
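A sketch of the difference, using OPENQUERY, which takes a linked server name and a query string to execute remotely. The remote database SalesDb, its dbo.Orders table and the temp table name are hypothetical; [RemoteServerName] is the placeholder used earlier in this section.

```sql
-- Four-part name: depending on the query, rows may be pulled across the
-- network first and filtered or joined locally.
SELECT OrderId,
       OrderTotal
FROM [RemoteServerName].[SalesDb].[dbo].[Orders]
WHERE CustomerId = 42;

-- Pass-through: the query text runs on the remote server, so only the
-- matching rows come back.
SELECT OrderId,
       OrderTotal
FROM OPENQUERY([RemoteServerName],
               'SELECT OrderId, OrderTotal FROM SalesDb.dbo.Orders WHERE CustomerId = 42');

-- Land the remote result in a local temp table, then work with it locally.
CREATE TABLE #RemoteOrders
(
    OrderId    int            NOT NULL,
    OrderTotal decimal(18, 2) NOT NULL
);

INSERT INTO #RemoteOrders (OrderId, OrderTotal)
SELECT OrderId,
       OrderTotal
FROM OPENQUERY([RemoteServerName],
               'SELECT OrderId, OrderTotal FROM SalesDb.dbo.Orders WHERE CustomerId = 42');
```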

Many Database Problem

For companies who are vendors or provide a service to multiple clients, it is not unusual to see a database created for each customer. The thinking here is that it silos each customer’s data into a separate database to ensure there is no risk of data being accessed by the wrong user. This makes sense, right? Sure, but there are definitely some issues that can arise from this:

- Standardisation is a big one. Can you guarantee that your databases are identical to each other? Are they even meant to be? If not, you will have issues performing operations that have to update all databases. Do you need to push a maintenance patch or hotfix? What if some databases have incompatible schemas? You’re going to run into a big headache here really quick.

- Context switching is absolutely an issue you should be considering in this scenario. Because the engine has to switch between databases constantly, it can put a hidden workload onto the server and cause some big issues if not monitored very closely.

- Maintenance is also another thing to consider. Many databases will require many backups, will contain many indexes, and statistics that require maintenance (you’re doing this, right?). Everything becomes a list that needs sorting through before any potential issues can be resolved.

The two main options we have here are to consolidate your customers into fewer databases (we’re aware of how much work this can be to implement) or to simply split your server into smaller servers and host fewer customers on each. In today’s world of virtualisation, this is a lot simpler than it used to be. You just need to monitor them to ensure you don’t have all of your busiest customers on the same server.

Many Tenants on a Host

This one is tricky without having access to the virtualisation layer. We have absolutely seen issues with over-provisioning hardware between multiple tenants. It seems obvious why you would consider doing this. Each server is not going to be using 100% of the resources allocated to it, so why not allow them all to have a bit more so you can utilise your hardware?

Let’s say we have 20 CPU cores and 1TB of RAM on a server and we have 10 tenants on this host. If we were hard provisioning, we would allocate two CPU cores and 100GB of RAM to each of these tenants. That way, everybody always has everything they want. But then we notice that the resources are not often used, and we sit around with spare CPU going idle. Why not put a few more servers on this box so we can save money? Makes sense, right? The directors would love it if we could provide a cost saving.

The issue comes when you have your busy periods, whether that be the holidays, or whether that’s Monday mornings when your customers come online. What then? Well, the tenants are going to fight for those resources. Your servers are going to think they each have two cores and can use them. But because we’ve now got 20 tenants on this host, that is 40 virtual cores competing for 20 physical ones, so they cannot all have those resources at the same time.

Seeing this inside SQL Server is extremely difficult, and even experienced troubleshooters would struggle to identify this as the problem.

A similar issue is that of “noisy neighbours”. What if one particular tenant on the host uses most of the resources? The other tenants are going to struggle to do what they need to, and there’s not much they can do about it.

Keeping Connections Open

When connecting to SQL Server, you’ll likely do multiple things, so it makes sense to keep the connection open until you finish the entire workload, right? That can come with some very expensive lessons to learn if this becomes commonplace in your environment.

There are a total of 32,767 user connections available in SQL Server. What happens when these are all used up? You simply cannot make new connections to the server. I will leave the consequences of that to your own imagination.

It’s very much best practice to only keep a connection open for as long as you absolutely require it. The resources required to open a new connection are negligible, true. But the long term consequences of keeping them open can be drastic. It’s also worth noting that you may not have an issue with this right now, but as databases and user counts grow, do you want to risk this or get it right from the beginning?
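To get a feel for how close you are to the limit and who is holding connections, something like the following can help. It is a sketch only; it uses the sys.dm_exec_sessions view, which typically requires the VIEW SERVER STATE permission, and the column aliases are our own.

```sql
-- Maximum number of simultaneous user connections this instance allows.
SELECT @@MAX_CONNECTIONS AS MaxConnectionsAllowed;

-- Current user sessions grouped by application, host and login.
-- Many sessions whose last request ended long ago can point to
-- connections being held open unnecessarily.
SELECT s.program_name,
       s.host_name,
       s.login_name,
       COUNT(*)                      AS SessionCount,
       MIN(s.last_request_end_time)  AS OldestLastRequest
FROM sys.dm_exec_sessions AS s
WHERE s.is_user_process = 1
GROUP BY s.program_name,
         s.host_name,
         s.login_name
ORDER BY SessionCount DESC;
```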

Debug Mode

It’s rare that a company will add the option to be able to debug SQL code. You may have the error message that something’s throwing, but where specifically is that error coming from? Most of the time somebody will need to extract the T-SQL code piece by piece and run it until they get to the problem code. This can be extremely time consuming and is luckily something that is absolutely avoidable.

Adding a @debug variable and the related code will save you so much time. The idea of @debug is that when you enable the flag, the code logs relevant information into a logging table so you can analyse it separately.

Adding the variable and related logging isn’t free. It’ll take a little time to ensure it works, but by getting to the standard where code has a debug option, you will make the long-term viability and maintenance so much easier.
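A minimal sketch of the idea, written as a stored procedure with @debug as a parameter. The logging table dbo.DebugLog, the procedure dbo.usp_ArchiveOrders, and the dbo.Orders columns are all hypothetical, so adapt the shape to whatever your own code needs to record.

```sql
-- Hypothetical logging table.
CREATE TABLE dbo.DebugLog
(
    DebugLogId    int IDENTITY(1, 1) NOT NULL PRIMARY KEY CLUSTERED,
    LoggedAt      datetime2(3)       NOT NULL DEFAULT SYSDATETIME(),
    ProcedureName sysname            NOT NULL,
    Step          nvarchar(100)      NOT NULL,
    Detail        nvarchar(4000)     NULL
);
GO

-- Hypothetical procedure with an optional debug flag (off by default).
CREATE PROCEDURE dbo.usp_ArchiveOrders
    @CutoffDate date,
    @debug      bit = 0
AS
BEGIN
    SET NOCOUNT ON;

    DECLARE @rows int;

    UPDATE dbo.Orders
    SET IsArchived = 1
    WHERE OrderDate < @CutoffDate
      AND IsArchived = 0;

    -- Capture the row count immediately, before another statement resets it.
    SET @rows = @@ROWCOUNT;

    -- Only write to the log when the caller asks for it.
    IF @debug = 1
        INSERT INTO dbo.DebugLog (ProcedureName, Step, Detail)
        VALUES (OBJECT_NAME(@@PROCID),
                N'Archive orders',
                CONCAT(N'CutoffDate=', @CutoffDate, N'; rows=', @rows));
END;
GO

-- Usage: run with logging enabled, then inspect dbo.DebugLog.
EXEC dbo.usp_ArchiveOrders @CutoffDate = '2024-01-01', @debug = 1;
```

Defaulting the flag to 0 keeps normal executions free of logging overhead, while a failing run can be repeated with @debug = 1 to see which step was reached and with what values.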
