We frequently see SQL Server instances that use the default memory settings, or settings that do not optimize performance. The default settings are 0 MB for min server memory and 2,147,483,647 MB for max server memory, or more than 2 million GB (keep in mind, memory settings are designated in MB, not GB).

The caveats are: Setting max memory too high means SQL Server will compete for memory with other processes on the same host. Setting it too low impairs performance. Microsoft recommends “75% of available system memory not consumed by other processes.” We prefer to start with a formula popularized by Jonathan Kehayias in this blog post.

1 GB for the OS + 1 GB for each 4GB of RAM between 4-16GB + 1GB for every 8GB above 16GB. So for a server with 128GB of RAM, that would be: 1 + ( ( 16 -4 ) / 3 ) + ( ( 128 – 16) / 8) ) = 19GB. More simply stated, you could say 5GB for the first 16GB of RAM and then 1GB for every 8GB after that.

Whatever formula you use, keep in mind that this is just a starting point. What is really needed is to monitor memory usage / pressure and adjust accordingly. Also keep in mind that the above is for a dedicated SQL Server with no other services. If you are running SSRS, SSIS, or SSAS on the same server, for example, you would need to include the memory those processes use.

Our guidance is: Start with 5GB plus ((TotalGB – 16GB) / 8) and adjust memory based on workload.

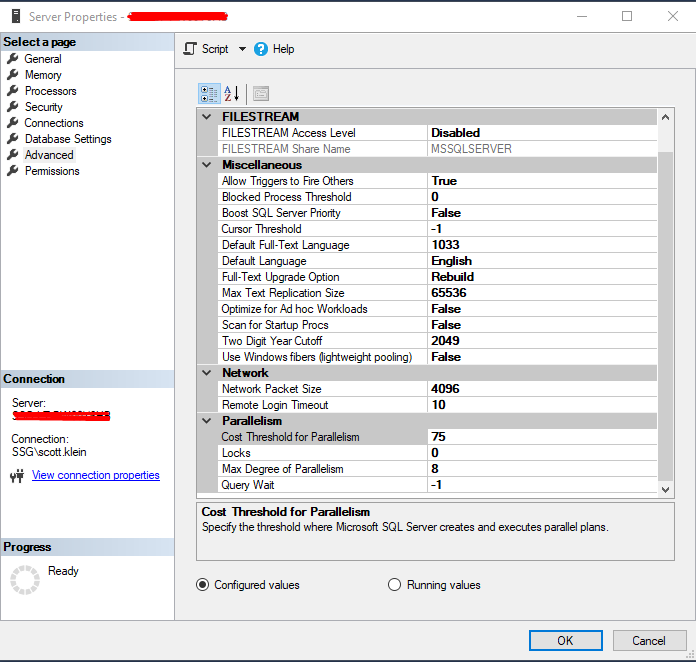

SQL Server memory settings

Max Memory: The upper amount of memory use in the buffer pool. You’re telling the OS how much to allocate to SQL Server. If you’re running 128 GB of RAM and designate 100 GB as max, it will only use (up to) 100 GB. If you allocate all memory, it will use it all. SQL Server is really good at taking all the memory it can, and even better at not giving it back.

Lock pages in memory: This is a Windows policy that determines which accounts can use a process to keep data in physical memory rather than paging to disc. You want to add the SQL Server service account to the policy so that Windows knows to keep SQL Server data in physical memory.

Optimize for ad hoc workloads: A sp_configure setting that you should turn on to improve efficiency of the plan cache for these workloads.

You can also use dbaTools (one of the most useful PowerShell modules you can find) to configure your instances. Test-DbaMaxMemory is your friend here. Think of these as great starting points, and set and monitor rather than set and forget.

This Post Has One Comment

Pingback: Hat mein SQL-Server genug Arbeitsspeicher? – Erhard RAINER