In this blog post, we’ll explore parallelism in SQL Server, a setting that can have a dramatic impact on the performance of your server. In short, this feature can significantly improve query performance by allowing multiple processors or cores to work together to execute a single query. I’ll introduce parallelism, discuss it benefits, and highlight areas where some caution is warranted.

What is Parallelism?

Parallelism is a technique used in computing where a task is divided into smaller sub-tasks which can be executed simultaneously by multiple processors or cores, allowing for faster processing and improved performance. In the context of SQL Server, parallelism allows for the parallel execution of query operations, such as scanning, filtering, joining, and aggregating large result sets, to be divided and processed in parallel by multiple CPU cores. This often leads to faster query execution times.

How Does Parallelism Work in SQL Server?

Parallelism in SQL Server is achieved using parallel query operators and worker threads. When a query is submitted to SQL Server for execution, the query optimizer may decide to parallelize certain operations in the query plan, based on various factors such as query complexity, available system resources, and server configuration settings.

SQL Server uses the “Max Degree of Parallelism” (MAXDOP) setting to determine the number of parallel worker threads that can be used for a parallel query. The MAXDOP is defined as the maximum number of CPU cores that can be used for parallel query processing. It can be configured at different levels, including server-wide, database-wide, and query-specific levels.

When a parallel query is executed, SQL Server divides the query plan into smaller tasks, also known as “query fragments,” and assigns them to different worker threads for parallel execution. Each worker thread independently processes its assigned query fragment and produces intermediate results. These intermediate results are then combined and returned as the final result set to the user.

Benefits of Parallelism in SQL Server

Parallelism in SQL Server can bring significant performance benefits to your database environment, especially for complex queries or queries that involve large result sets. Here are some key advantages of using parallelism in SQL Server:

- Improved Query Performance: Parallel query execution allows SQL Server to leverage the full potential of the underlying hardware by utilizing multiple processor cores. This can significantly reduce the overall query execution time, particularly for resource-intensive operations involving large datasets or complex joins.

- Enhanced Scalability: As the size of your database and the workload increases, parallelism enables SQL Server to scale efficiently. By distributing the workload across multiple processors, SQL Server can handle larger volumes of data and complex queries without sacrificing performance.

- Utilization of Hardware Resources: Parallelism ensures that your hardware resources, such as CPU cores, are fully utilized. Instead of having idle cores while a single query runs, SQL Server can parallelize the workload and maximize the usage of available resources.

- Efficient Utilization of Memory: Parallelism also optimizes the usage of memory by allowing multiple threads to share memory buffers. This reduces the memory requirements for individual queries, enabling SQL Server to handle more concurrent requests without exhausting the available memory.

But Caution, Here Be Monsters

One of the things that Microsoft has not been particularly good at is keeping up with the times when it comes to system settings. This failing includes Parallelism. Ultimately there are two settings that need to be considered here and while Microsoft have gotten better at one, they are still not great at the other.

- Max Degree of Parallelism

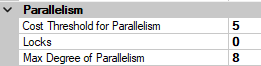

We’ve mentioned this setting, but what’s the best for your environment? This setting depends almost entirely on the number of CPU cores you have available on your server. How many of your valuable cores do you want to allow one query to consume? It’s probably not all of them because then you’ll have contention with other queries waiting for the CPU to become available.

The rule of thumb here is to set this to half the number of cores on your server — up to a maximum of eight. There are calculators out there that can help, but ultimately you’re going to have to tweak this number to suit your environment and workload. Newer versions of SQL do this on install and it works well for a default, but older versions of SQL default to 0.

Go check out your own settings to see how you’re lined up

In SSMS, Right Click Server Name -> Properties -> Advanced

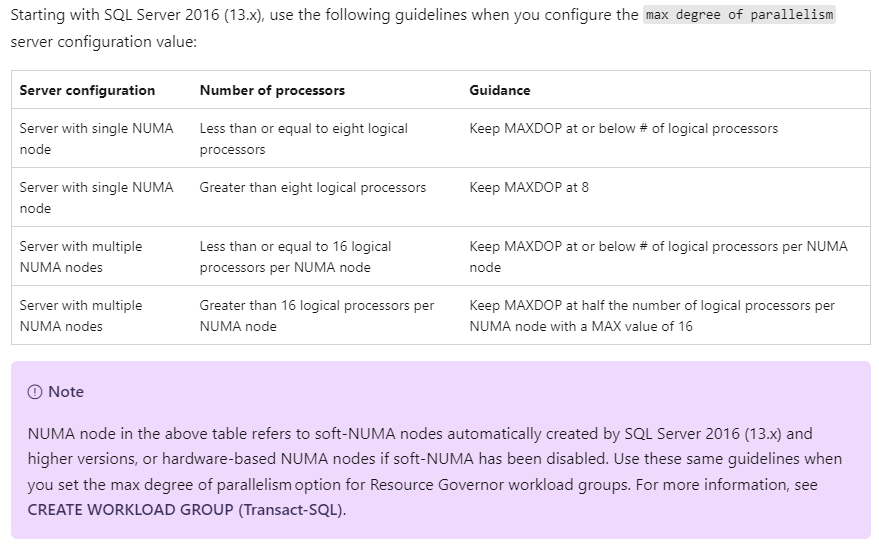

It’s worth noting that as of SQL Server 2016, Microsoft advice on this setting has changed slightly with the introduction of soft NUMA. Soft NUMA nodes can contain a different number of cores than your physical NUMA nodes. These are decided automatically by SQL Server. The purple note here is extremely important:

You can disable soft NUMA if you absolutely need to, but it’s not likely to cause you any issues.

- Cost Threshold of Parallelism

This is the threshold for when a query should go parallel. The ‘cost’ of a query is an abstract number at this point, but it is SQL Server’s determination of how much work your query is going to take to execute.

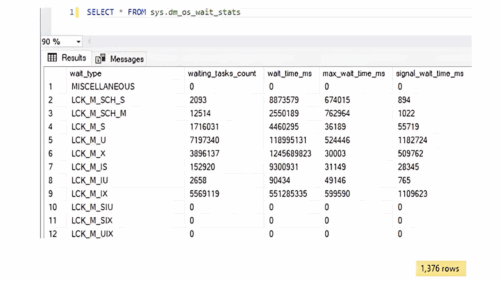

The default for this is 5. This is an incredibly low number, and it means that basically every query on your server is likely to go parallel. This isn’t useful for smaller queries, as it often takes more time to split the query into parallel threads and then gather them back together than it would take to just run the query single threaded!

Every environment is different, but the default we recommend at SSG is to set this to 75. To further fine tune this number, you will want to look at the queries that are executing on your server and decide if you need to alter this threshold either up or down to suit your needs.

Bullying SQL Server

Further to the above, we can decide that we know better than SQL when it comes to particular queries. Say, you have one huge reporting query that runs at month-end and it runs out of hours. With the settings above we’re only going to allow it to run on half our CPU. This may well be inefficient for your workload, and you might want to give this big chungus of a query all of the CPU it can take.

Thankfully, we can use query hints to change this for certain queries. OPTION (MAXDOP) is our friend here. With this little query hint we can give our queries as many CPU cores as they can handle. This is particularly useful for things like Index creation/rebuilds that are blocking operations. You probably want these to go as fast as possible, so make sure you don’t forget your MAXDOP hint!

Here’s the Microsoft Documentation on MAXDOP, in which you can take this a step further and change this at a database level and also include it in any resource governor settings you may have.

Ultimately, you can go with guidelines as a default, but it’s going to be up to you to see how these perform on your system. Do you have a lot of queries that come to around the same cost? Do you want these to go parallel? Answering these questions is going to allow you to work out the best numbers for your environment. Don’t forget that as your data changes, your query plans and costs are likely to change too. This isn’t a set and forget, but rather monitor and tweak as you go.